Introduction

The paper “FMMI: Flow Matching Mutual Information Estimation” (Butakov et al. 2025) proposes a new way to estimate MI by learning a continuous normalizing flow that transports the product of marginals into the joint distribution , and then reading MI off from the divergence of the learned velocity field.

In this post, we’ll walk through:

- The core idea: MI as a KL divergence and as a difference of entropies

- Continuous flows and the identity

- A key lemma: entropy difference as an expectation of divergence

- How Flow Matching learns the velocity field from samples

- How all of this specializes to a mutual information estimator (jFMMI)

- The Monte-Carlo / Hutchinson’s trick implementation

1. Mutual Information and the product of marginals

Let and be random vectors with joint distribution and marginals , . The mutual information is

where is the product measure (the joint distribution you’d get if and were independent).

If densities exist, we also have the familiar entropy identities

with differential entropy .

The FMMI paper will express entropy differences via flow matching, and then plug those into these identities to get MI.

2. Continuous flows and divergence

Continuous normalizing flows describe a time-dependent random variable via an ODE

where is a velocity field. Let be the density of . Under mild regularity, continuous flows satisfy the classic identity

This is equivalent to the continuity equation

and can be seen as the continuous-time version of the change-of-variables formula.

Integrating (1) along the trajectory from to gives

Taking expectations will connect divergence to entropy; that’s the next step.

3. Entropy difference = expectation of divergence (Lemma 4.1)

Define differential entropy

Differentiate w.r.t. time using (1):

Integrate from to :

If we now draw and consider the joint distribution of , this integral can be written as a single expectation:

4. Learning the velocity with Flow Matching

Of course, in practice we don’t know the true velocity . We only have samples from and .

Flow Matching (Lipman et al.) gives a way to learn from samples alone, by turning the CNF problem into a regression problem on velocities.

The trick is:

- Pick a simple conditional path between two endpoints and , e.g. linear interpolation

- This path has a known conditional velocity

Flow Matching says: instead of integrating the ODE during training, just regress a neural network on these conditional velocities. Concretely, the FM objective is:

When this regression succeeds, the marginal flow induced by transports to in the same way the “true” flow would.

This is what the paper calls FMDoE (Flow Matching Difference of Entropy): first learn by FM, then use (2) to estimate entropy differences via divergence.

5. From entropy differences to mutual information

Now we connect this to mutual information.

Recall one of the entropy forms of MI:

The idea in FMMI is:

- Build flows between carefully chosen distributions, so that entropy differences recover MI.

- Use FMDoE to estimate those entropy differences via divergence expectations.

jFMMI: joint-based estimator

The simplest variant, jFMMI, chooses:

- : product of marginals

- : joint distribution

Let . Under , , while under . Then:

- Entropy at (t=0): since and are independent under .

- Entropy at (t=1):

So the entropy difference is

Combine this with the DoE identity (2):

where is any velocity field whose flow maps into . Therefore,

This is the key theoretical identity behind jFMMI: mutual information is the negative divergence expectation of any flow that transports the product of marginals into the joint.

In practice we don’t know , so we plug in the learned :

That’s jFMMI.

6. Estimating divergence in practice: Hutchinson’s trick

To use (5), we need , without computing a full Jacobian.

Let

Hutchinson’s estimator says: if , then

So an unbiased estimate is:

- Sample ,

- Compute the scalar

- Backpropagate ,

- Form

This requires only one backward pass per point, instead of passes.

7. Monte-Carlo implementation of jFMMI

Putting everything together, FMMI implements (5) via simple Monte-Carlo:

- Sample a batch of pairs where

- (true joint),

- (shuffle Y independently to break dependence).

- Sample .

- Build the interpolated points

- Evaluate the learned velocity field .

- Estimate the divergence via Hutchinson.

- Each sample gives a stochastic MI contribution

- Average over many samples:

8. Why this is interesting

Compared to classic discriminative MI estimators, FMMI:

- avoids large batch / negative sampling issues,

- does not require directly estimating density ratios,

- scales better to high MI regimes (where joint and product distributions are very far apart),

- and leverages the modern, well-understood machinery of flow matching.

Conceptually, it reframes MI estimation as:

Learn a path that morphs independence into the true joint, and read MI as the total log-volume change along the way.

Once you see MI as entropy difference, and entropy difference as expected divergence of a flow, the whole construction becomes very natural.

Experiments

To validate the jFMMI estimator introduced in the paper, we designed a controlled numerical experiment where the true mutual information (MI) is known analytically. This allows us to measure estimation accuracy, bias, and convergence of the proposed method.

Synthetic Data Setup

We consider a simple bivariate Gaussian model:

With this construction, the mutual information has a closed-form:

We set , giving a ground-truth MI of:

We generate 30,000 samples from the joint distribution . To obtain samples from the product of marginals , we shuffle the values independently from . This gives endpoints for flow matching.

Flow Matching and Velocity Network

We train a neural velocity field with 512 hidden units and Tanh activations to match the true conditional velocity along the linear path:

The Flow Matching loss is:

The model is trained for 5,000 gradient steps with AdamW and cosine annealing.

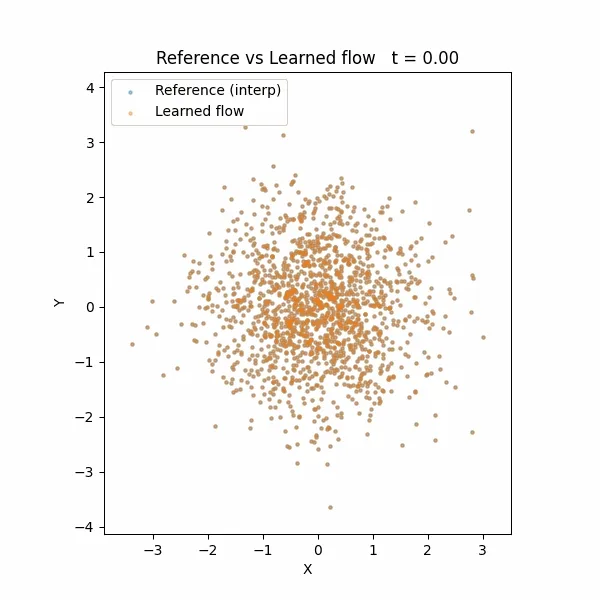

Although the FM loss exhibits mild oscillation, the flow nonetheless converges well: the learned transport visually matches the ground-truth Gaussian interpolation when animated.

Mutual Information Estimation

After training, we estimate MI using the jFMMI identity:

with divergence estimated via Hutchinson’s estimator:

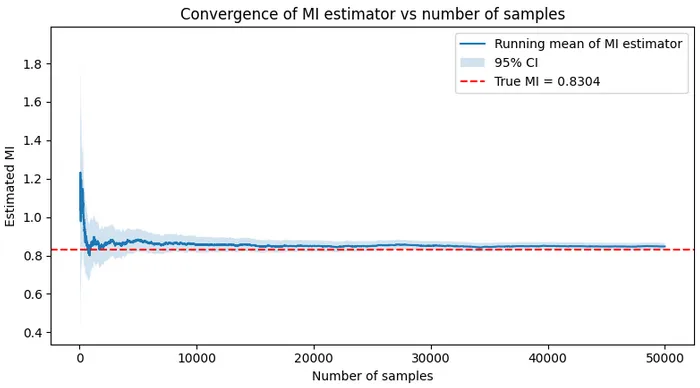

We draw 50,000 Monte-Carlo samples to approximate the expectation.

Results

The estimator converges towards the true MI:

- True MI: 0.8304

- Estimated MI (jFMMI): ≈ close to the true value

- Standard error: small (scales as )

The curve shows fast convergence but a small positive bias, expected due to imperfect learning of the velocity field. Increasing network depth significantly reduces this bias, confirming the role of expressivity.

Flow Visualization

We additionally animate the learned flow and the ground-truth flow simultaneously. The trajectories of particles under both flows almost perfectly overlap, demonstrating that the learned captures the correct transport from to .

This visual inspection confirms that Flow Matching learned the intended probability path, validating the theoretical assumptions behind jFMMI.